“Any sufficiently advanced technology,” wrote Arthur C. Clarke, “is indistinguishable from magic.” It is indeed hard not to feel you’re in the presence of magic when you first encounter Suno—an AI-powered website that lets you generate original songs in seconds. Try it for yourself or watch a YouTube demo, and you’ll surely agree that its outputs across every genre and style are uncannily effortless, bordering on impossible.

Once you wipe the stars from your eyes and start to grapple with actually using this technology, though, a font of questions bubbles to the surface—both practical and philosophical. I discovered this firsthand last weekend,[1] when I spent three consecutive days making three original AI-generated songs.

In just a moment, I’ll link the audio and lyrics for these songs. First, though, some important context:

I wrote these lyrics myself, with no technological aid beyond a rhyming dictionary.

The album art is 100% AI-generated. (I had no control over the final image other than inputting the lyrics that Suno uses as a prompt; I cannot edit it after the fact.)

The audio you are hearing—melody and harmony, vocals and instrumentation—is 100% AI-generated. That said, it has gone through a heavy selection and curation process. (Much more on this in a moment.)

All these songs are written from fictional viewpoints. (This should be fairly obvious from reading the lyrics, but it bears mentioning.)

Per Suno’s terms of service, I retain ownership rights to all songs. (Not that I plan to drop an album; however, this points to some key ethical questions around AI and ownership that I’ll touch on.)

Drumroll Please…

And now, without further ado, I am pleased to present:

SYLVIA (MADE MY BED) — a singer-songwriter’s tale of infidelity and regret (lyrics).

YOCHEVED’S PRAYER — a pseudo-religious anthem about our earthly existence (lyrics).

I’M NOT GONNA WANDER FAR — a bluegrass banger with a heart of gold (lyrics).

Note that the lyrics are also included with the audio itself; however, they are often corrupted/redundant, for reasons I’ll explain shortly, hence the separate lyrics docs.

A Three-day Suno Crash Course

This is a more detailed walkthrough of the process that led to these songs. To read just the takeaways, scroll to the bottom.

Day 1: Sylvia (Made My Bed)

I wrote the lyrics to “Sylvia (Made My Bed)” in their entirety before entering them into Suno. Having played around with fully AI-generated clips,[2] I assumed I’d be able to just dump all the words into Suno and get a passable output after a few attempts. As it turned out, my lyrics were too long to fit in the text box, cutting off after the first four verses. I figured this wouldn’t be an issue, since I knew Suno had the option to extend clips.

Unfortunately, while the “continue song” option works well for repetitive (and particularly instrumental) tracks, it often caused issues for the more vocals-forward genres I was working with. Specifically, even though “Sylvia” has a very simple structure (verse / verse / chorus x 3), Suno struggled to remember the melody it used from one verse to the next.

(Even in the full, finished version, you’ll notice if you listen closely that the melody wanders from the beginning to the end of the “recording”—a fact that is true of all three songs. While this evolution is sometimes an artful deviation, more often than not it sounds like a form of short-term memory loss.)

A second major challenge was getting Suno to sing my lyrics without messing up their rhythm and inflection. (Again, you’ll notice even in the finished products that I didn’t fully succeed at eliminating this problem.) For example, in this output and this one the vocals enter immediately with strange hallucinated words. I rather like the sound of these two early attempts, but there are some bizarre line breaks. This one was too slow for my taste and also has some strange line breaks—plus the melody of the third verse is totally different from the first two.[3]

If you’d rather not listen to all those clips, here’s the best explanation I can offer on the page. Consider an input like the words to “Old MacDonald”:

Old MacDonald had a farm

Ee-i-ee-i-oh

And on that farm he had a pig

Ee-i-ee-i-oh

Any normal human reading these lyrics—even if they’d never heard the song before—would still know to group the right words together. Suno, instead, would often generate phrasings like:

Old MacDonald had a farm-ee

I-ee-I-o on that farm he had a

pig-ee-I-ee-I

Oooh oooh.

(Hopefully the nonsensicality of it is coming through—if not, I’d recommend just clicking into some of the clips in the previous paragraph.)

Third, generally speaking, Suno has a bias toward clips in medias res—which is to say the audio sample is often made to sound like it’s coming from somewhere in the middle of an existing song, rarely the beginning or end. Concretely, this meant that it was difficult to generate a clip with an instrumental intro before the singer entered, or to get a clip that ended in silence.

All of these challenges—melodic deviation, butchering lyrics/phrasing, and awkward transitions—seemed to fluctuate randomly, such that it was rare indeed that I output a clip with zero disqualifications. I often felt like I was playing a slot machine, crossing my fingers for the three icons to line up. And that was without even considering whether I liked how the clip sounded aesthetically!

So, yeah, there was a lot of trial and error…

Of course, if I’d wanted to, I could have downloaded any of these clips and manually cut and spliced them (e.g., using a program like GarageBand). No doubt, in the not-too-distant future, this kind of editing functionality will be integrated into Suno or its competitors. For now, though, I remain an intermediate GarageBand user at best—and besides, I wanted to see what I could make within the constraints of the technology. This meant I basically just had three creative levers to pull:

Create a clip, with modifications to lyrics or style input;

Choose whichever of the outputs was most to my liking; and

Continue a clip from the timestamp of my choice.

(Note that in the current version of Suno, as far as I’m aware, there is no way to delete or edit the lyrics after the output is generated. This means, for example, that if I generated Verses 2 and 3 of a song but then decided to continue the song from Verse 2—i.e., lopping off 3 in the audio—the lyrics would ultimately contain Verse 3 twice: once from the original prompt, and once from the prompt for the continuation. This is why, if you look at the lyrics included in the three audio links, there is lots of redundancy. You’ll also notice that this happens less and less with each subsequent song because I learned to generate in smaller/more Suno-friendly chunks.)

Using this create-choose-continue method, I quickly learned that I was unlikely to get more than one good verse at a time. The odds of the stars aligning for more than thirty seconds in any given output were low.

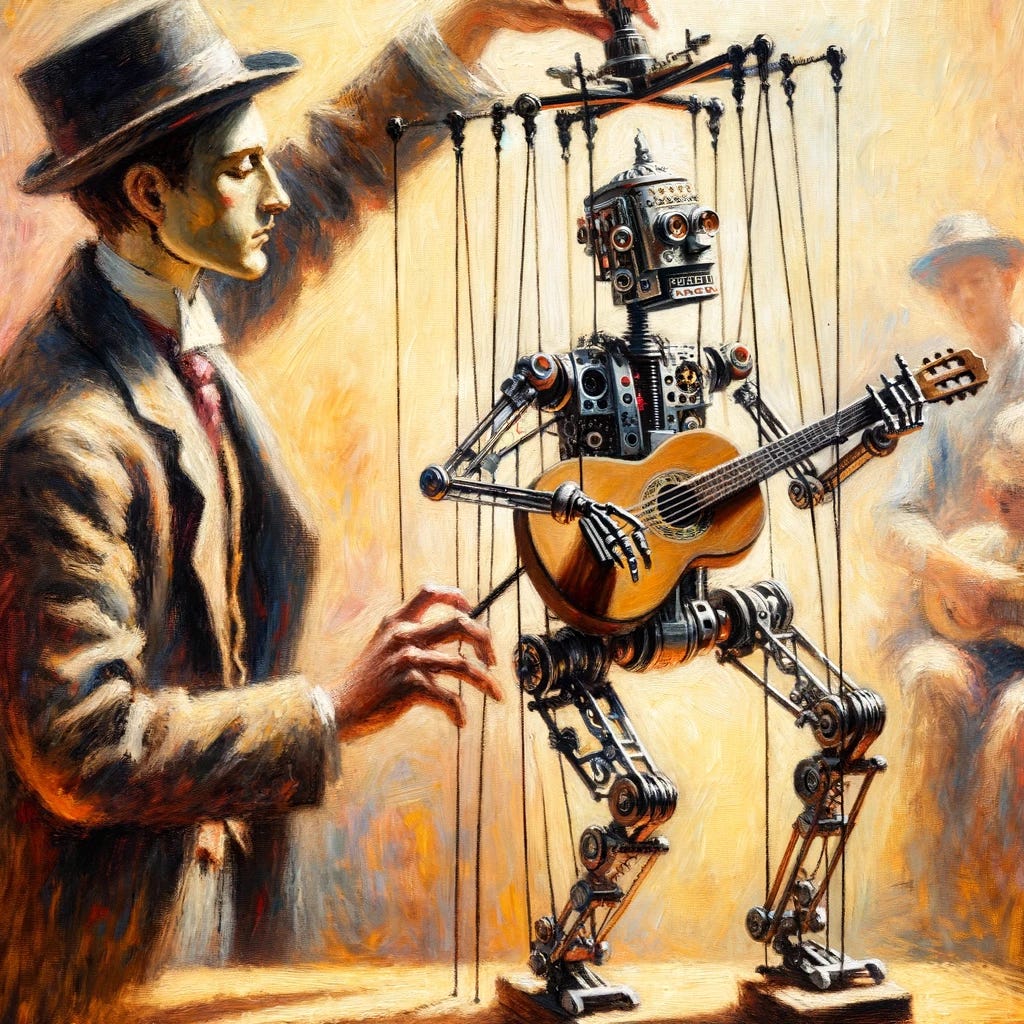

But it wasn’t just button mashing either. By the end, rather than playing a slot machine, it felt like I was using successive approximations to train a smart but chaotic alien, or communicating with a sophisticated agent in its second language, trying to get it to enact my will. There was still a lot of randomness in the output, but by selecting the versions I liked, I was able to nudge the end result toward coherence.

Day 2: Yocheved’s Prayer

“Yocheved’s Prayer” was the only song whose lyrics I already had kicking around my file drawer—the original source being a writing project I’d abandoned years ago, about a (fictional) cult. What made this song unique among the three was that I also already had a simple melody in mind prior to “recording.” This meant that, unlike “Sylvia” and “I’m Not Gonna Wander Far,” I was aiming for something quite specific in my output.

Of course, trying to get Suno to produce what I had in my mind’s ear was a futile exercise, since I had no keyboard or means of inputting notes. Based on a suggestion from the Suno Discord server, I tried feeding it chords, and while it did produce a song in C major as I’d asked, the result was otherwise a total flop as far as I could tell.

(Hilariously, there were several iterations where I got background vocalists singing lines like “resolve to C major,” and once, when I bracketed instructions for a suspended G-chord—demarcated [GSus-4]—Suno sang something that sounded suspiciously like “Jesus, Jesus is strong.”)

Still, I imagine we’re not that far away from a technology where you could sing or play a melody and have AI build out instrumentation around it. (We’ve had tuners that can recognize pitches for a while and now we have Suno to do the generative part; surely A + B = C?) You could envision audio editing programs that, at the click of a button, would play back your recording with strings or electric guitar added, or suggest three melodic variations.

I understand how this world might be viscerally repugnant to today’s musicians, who have spent years honing their skill. Personally, though, as someone who grew up playing music but was never a prodigy, I find this future exciting. In the past, my only path to manifesting an idea for a song would have been years of learning how to play an instrument[4] or paying for professional musicians and recording services. Now I can do it much more cheaply and accessibly.

Nor, it’s important to point out, is this process devoid of skill. Of course, you need significantly less skill to make a song with Suno than to do the same from scratch—but not zero skill. There is still a process of curation and selection—in my own experience, quite a time-intensive one to get the result I wanted. No doubt if I were a better musician, and better at getting Suno to cooperate, I would have gotten an even better song.

In other words, after a couple of days of immersion in the Sunoverse, it occurred to me that making with AI isn’t so different from what real artists do—it’s just operating at a different level of abstraction, and relying on more brute-force solutions. For example, consider this striking clip of Paul McCartney composing the song “Get Back” in about a minute:[5]

Like Suno, Paul is also trying different variations on his melody. Like me, he is also experimenting and then reacting to how the results sound. The crucial difference is that in Paul’s case, his skill is so astronomically high that his search space is much smaller: Unlike Suno, he has perfectly honed intuitions about what chord and note combinations are likely to sound good, and unlike me, he doesn’t have to listen through hundreds of audio clips to separate the wheat from the chaff.[6]

As a practical matter, I did find that my skill with Suno increased a lot through the first couple days. For example, I realized—probably too late—that because the initial output determines the trajectory of the whole of the song, it’s important to generate a lot of options up front. (In particular I wish I’d settled on a different chorus for “Sylvia” :/.) I got better at crafting prompts that would encourage Suno to fill space, or end the song at the right time—e.g., including cues like “Ooh, ooh” or “[soft piano].” I even solved some of the rhythm/inflection problems by modifying verses for more favorable pronunciation. (“Our time is a dove” became “Our time i-is a dove” and eventually “Our time ih-is a dove.”)

Of the three songs I made, I’m probably the least happy with how “Yocheved’s Prayer” came out, but it allowed me to develop some techniques that bore fruit in my next attempt.

Day 3: I’m Not Gonna Wander Far

Picking up a theme from my last post, one notable change from Day 1 to Day 3 is that I got a lot faster with Suno: Whereas I spent most of my Saturday working on “Sylvia,” I made the entirety of “I’m Not Gonna Wander Far” in four hours—split evenly between lyrics and “recording.”

I’d written “Sylvia” aiming directionally toward the singer-songwriters I admire most—Jason Isbell, Aimee Mann, Joni Mitchell,[7] etc.—and written “Yocheved’s Prayer” imagining a piece that was moving and profound. In the end, the former came out as what one good friend described as “Mumford and Sons core,” while the latter is undeniably schmaltzy. This isn’t to say these songs sound bad (I like Mumford and am a (mostly) unironic fan of Christian music!)—just that they’re more musically conventional than I’d projected. Perhaps this is unsurprising given a technology created from the statistical averages of an immense corpus of music.

In any event, for my third song, I decided to lean into the campiness and try to just make something fun—albeit still with a bit of narrative to it. (I credit Billy Strings’ “Dust in a Baggie” and Pokey LaFarge’s “Two-Faced Tom” as inspirations.) Ironically, I think “I’m Not Gonna Wander Far” actually sounds the least conventional, musically speaking—perhaps because I included the word “bluesy” as a style cue.[8]

This feels like as good a time as any to talk about copyright and intellectual property, and the short version is: I have mixed feelings. On the one hand, it’s obviously true that Suno is built on the labor and creativity of God knows how many artists. On the other hand, I don’t think these recordings are ripping off any specific artist—any more than it would be a ripoff for me to record a bluegrass song the old-fashioned way. No one owns bluegrass (or punk, lofi study music, etc.).[9]

Ultimately, I think I would favor whatever laws and norms promote the most creative and compelling cultural products, so long as there is no severe harm to artists. I agree with Suno’s (i.e., the company—not the AI) choice to give paying users ownership over what they make: If individual artists don’t own bluegrass or punk or lofi, this feels only fair. In other words, we should distinguish between the right to make/sell generative AI and the right to profit from its outputs. (It seems we already do, by default?) Then again, maybe I’m just suffering from an endowment effect.

In any event, one nice benefit of doing an uptempo bluegrass tune—given the current constraints of the technology—is that I could fit more lyrics in any given thirty- or sixty-second clip. This meant I could usually fit at least one verse and chorus into a base segment prior to continuation, which helped to reduce Suno’s short-term memory loss.

In fact, the lyrics-per-second ratio was so efficient that I was even able to include a bridge—the only non-repeated melody in any of the three songs. This bridge may be my single favorite moment in all that I produced, and I think that’s partly due to the freedom I had in generating/selecting it: Because there was no need to match the bridge to other verses or refrains, I could go nuts with the “Create” button until I got an output I liked (that is, assuming Suno didn’t mess up something else in the clip).

Of my three songs, I’m happiest with how “I’m Not Gonna Wander Far” came out; Suno’s indulgent banjo solos aside, this also seems to track with public perception. (For about a day, “I’m Not Gonna Wander Far” hovered around #6 on Suno’s trending page.)

Four Takeaways

These days, it’s become fashionable to speculate about the secret recipe behind generative AI, or worry about the health of a society spoonfed artificial content. For my part, I’m much more interested in the mouthfeel: What is the moment-to-moment experience of making with these tools?

At present, my answer would go something like this:

Working with Suno is like delegating to a prodigy who fails to understand some very basic assumptions about what people like. It rarely makes something that sounds bad—and if it does, the badness is usually because it does something overly conventional or showy, never dissonant. Sometimes it surprises you with technically brilliant flourishes or a comical misinterpretation. Given enough tries, it usually gets where you want it to go. [formatted for emphasis—not a quote]

As you continue to push and cajole Suno, you do develop a sense of its characteristic strengths and flaws—its personality, so to speak.[10] With this in mind, here are four observations about what generative AI can and will do:

1. Generative AI is locally mimetic but globally myopic.

This, I think, is the crux of my difficulties getting Suno to stick to a consistent melody. Mostly it’s just reacting to the previous twenty or thirty seconds—either repeating those notes exactly or with slight variations.

Suno doesn’t have much discretion about when to repeat exactly and when to evolve (each of which may be preferred at times), so the overall process resembles a game of telephone. If Verse 1 matches Verse 2, Verse 2 matches Verse 3, and Verse 3 matches Verse 4, then, by the transitive property, Verse 1 should match Verse 4. In practice, though, there is the possibility of variance with each translation: You have to be both lucky and disciplined as a curator to maintain consistency.

Ultimately, this telephone game produces an illusion of coherence—but it’s just that: an illusion. Instead of truly remembering the first verse, Suno remembers the memory of the memory of the memory of the first verse. (Again, to be clear, this is based on an almost anthropological observation of its behavior; I don’t claim to know what’s actually happening in its “brain.”)
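To make the telephone-game intuition a little more concrete, here is a toy simulation in Python. It is a sketch under loud assumptions, not a description of how Suno works internally: a melody is collapsed into a single number, Verse 1 sits at zero, and each continuation copies the previous verse plus some random noise (`step_noise` is a hypothetical knob). Optionally, a "curator" hears several candidate takes per prompt (two, say, mirroring the two outputs Suno returns per prompt) and keeps whichever lands closest to Verse 1. The function names `final_drift` and `mean_drift` are invented for illustration.

```python
import random
import statistics


def final_drift(num_verses: int, step_noise: float, candidates: int, rng: random.Random) -> float:
    """Simulate one song and return how far its last verse ends up from Verse 1.

    Toy assumptions (not how Suno actually works): a melody is a single number,
    Verse 1 sits at 0.0, and every continuation copies the previous verse plus
    Gaussian noise with standard deviation `step_noise`.
    """
    position = 0.0  # Verse 1 is the reference melody
    for _ in range(num_verses - 1):
        # Generate several candidate takes, each a noisy copy of the previous verse.
        takes = [position + rng.gauss(0.0, step_noise) for _ in range(candidates)]
        # The curator keeps whichever take sounds closest to Verse 1.
        position = min(takes, key=abs)
    return abs(position)


def mean_drift(num_verses: int, step_noise: float = 1.0, candidates: int = 1,
               trials: int = 5000, seed: int = 42) -> float:
    """Average first-to-last-verse drift over many simulated songs."""
    rng = random.Random(seed)
    return statistics.mean(
        final_drift(num_verses, step_noise, candidates, rng) for _ in range(trials)
    )


if __name__ == "__main__":
    for verses in (2, 4, 8, 16):
        blind = mean_drift(verses, candidates=1)    # pure telephone game
        curated = mean_drift(verses, candidates=2)  # keep the better of two takes
        print(f"{verses:>2} verses: blind={blind:.2f}  curated={curated:.2f}")
```

With a single take per prompt, this is a random walk: the average distance from Verse 1 keeps growing as verses pile up (roughly with the square root of the number of continuations), even though each verse closely matches its neighbor. With two takes and a picky curator, the drift stays much tighter, which is one way of picturing why consistency takes both luck and disciplined curation.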

2. Generative AI’s superpower is riffing.

The upside of this repetition-with-variation tendency is that Suno is extraordinarily good at riffing. It lives in a kind of dreamlike state, in which every moment is a natural and effortless response to the previous moment—self-consistent and continuous without an overarching plan.

The results of this riffing are often striking and beautiful—sometimes uncannily so. AI-generated videos offer a good visual demonstration, like this one from Sora, in which wolf puppies seem to manifest spontaneously yet somehow fluidly:

This dreamlike quality means that, in general, some of the best use cases for generative AI are those involving ideation and aesthetic tweaks: If you provide the right constraints, it will provide the right kind of creativity.

3. Generative AI is good for bad artists and neutral(ish) for good artists.

It’s pretty obvious how AI is good for bad artists: Any asshole can type some words into Suno and have a catchy audio clip in seconds.[11] This technology reduces the gap between the floor and the middle.

For good artists, there are tradeoffs. Naturally, with lower barriers to entry, talented musicians will face increased competition from below. But simultaneously, there are surely gains to be found in efficiency and specialization: Everyone will be able to record songs more cheaply and quickly—the best artists most efficiently of all—and it will be possible for people to outsource parts of the process they would previously have had to do on their own.

For example, some musicians are world-class poets and shit singers (looking at you, Bob), others famously don’t even write their own lyrics, while a select few are both wordsmiths and warblers. If some artists find the joy in working out the interplay between words and melodies, great! But for those who tend toward one over the other, both the artist and their audience benefit from their being able to specialize. (And again, any artist will benefit from the ability to easily riff on and reconfigure songs, should such technology become widely available.)

This is why I conclude that generative AI is more or less neutral for good artists: Among those who would have been stars in a previous generation, there will be some winners and some losers. But on average, I think improvements in quality and productivity (plus a deep-seated appetite for authenticity) will offset the increased competition from AI-aided newcomers.

4. AI-generated art is not categorically different from human-generated art.

Make no mistake: A world with AI-generated art will still contain human creativity—even if it generally requires less depth of skill. The creativity of this world will simply be imbued at different points in the artistic process, and according to individual preferences.

Today, for example, you wouldn’t say that an architect is uncreative because she fails to consider the nuances of interior decorating. Nor would you say a graphic designer is uncreative because he doesn’t know the source code for Photoshop and Canva. The creators in these examples are simply creating from a wider aperture or higher level of abstraction—yet they’re clearly still enacting an aesthetic vision through a process of ideation and selection.

So too when you prompt an AI to make a text, song, or image.

And in a not-too-distant world, individuals will surely be able to choose the exact degree to which they want artificial enhancement of their art. If you record an original demo and have AI autotune it, is that no longer “art”? What if the AI suggests some alternative instrumentation? What if you autogenerate a guitar solo between the third and fourth verse, or the lyrics and melody of half a verse and write the rest yourself? “Art” is a continuum, not a binary.

In some domains, it will of course be more important than in others to have significant human input. Just as it would be bizarre to have ChatGPT write a journal entry or passionate love letter on your behalf, it feels strange to imagine sitting around a campfire singing a song written by Suno. These are fundamentally self-expressive contexts. But if you’re in a club listening to industrial German house, do you really care where the noise came from? The point of that music is utilitarian or (no pun intended) instrumental: to get you thrashing on the dance floor.

Ultimately, I come out cautiously optimistic on generative AI. Of course, there are worst-case scenarios for this technology we absolutely want to avoid, and we should strive to balance the dignity of creators with the enjoyment of consumers. But speaking for myself, I know I experienced a strong sense of flow making these songs, and certainly feel they are imbued with my personality and aesthetic choices. It may not be Mozart, but it’s art.

Besides, like it or not, AI isn’t going away anytime soon: You can either learn to work with it or stick your head in the sand. I’ll admit it’s warm down there in the sand, but the acoustics could be better.

[1] Technically, last weekend plus four hours on Monday morning.

[2] Suno has two modes: “standard,” in which the user inputs a top-level prompt and AI generates the rest, and “custom,” in which the user inputs a title, lyrics, and style specifications. (My songs were made using the latter.)

[3] Suno generates two outputs for each prompt—presumably because there is variance in quality and this allows its creators to collect reinforcement learning data from users.

[4] Actually, after playing around with Suno, I’m much more inclined to skill up on guitar than I was before. I think this is another benefit of generative AI: to help us imagine what our art would be if we worked at it.

[5] I’ve used this example before because I think it’s such a perfect microcosm of the creative process.

[6] I’m reminded here of the difference between how computers and humans play chess. While software can churn through an ungodly number of permutations, the best humans achieve comparable results just through pattern-matching.

[7] Thank God the drought is over!

[8] I suspect that in general, fusions and mashups are a shortcut for nudging generative AI toward more surprising outputs; otherwise it will just go with the wisdom of the crowd.

[9] I’m more sympathetic to the claim that certain cultural groups have a special claim to certain art forms because they originated them. But I think this speaks more to who should publicly perform and profit from those art forms than who is allowed to enjoy making them. It also seems inevitable to me, descriptively, that any sufficiently compelling art form will eventually come to be seen as fair game for mainstream use (e.g., the acceptability of white people making jazz, funk, and rap music, in that order).

[10] I do NOT believe Suno is conscious.

[11] To wit: The catchiest song I’ve made to date is an autogenerated EDM track about a friend’s obsession with butter noodles. (NSFW; second verse entered manually.)

OMG!